Security Risks in Cloud-Native Software Enterprises

As organizations increasingly adopt cloud-native technologies to fuel innovation and scalability, securing these dynamic environments has become more complex than ever. The attack surface is broad, spanning containerized applications, Kubernetes orchestration, CI/CD pipelines, and third-party integrations. This article series tackles the most pressing security challenges in modern cloud-based ecosystems, covering critical topics like container vulnerabilities, API security, supply chain risks, and privilege escalation. Through actionable insights and strategies, we aim to help developers, security teams, and IT leaders build and sustain resilient, secure environments in an evolving threat landscape.

Container Vulnerabilities

Containers have revolutionized application deployment, offering scalability and efficiency. However, they also introduce unique security risks that attackers can exploit. Common vulnerabilities include running containers with excessive privileges, outdated base images with known CVEs (Common Vulnerabilities and Exposures), and misconfigured networking that exposes sensitive workloads. A compromised container can become an entry point for attackers to move laterally, escalate privileges, or exfiltrate data. Without proper security controls, containerized applications can be just as vulnerable as traditional workloads, if not more so.

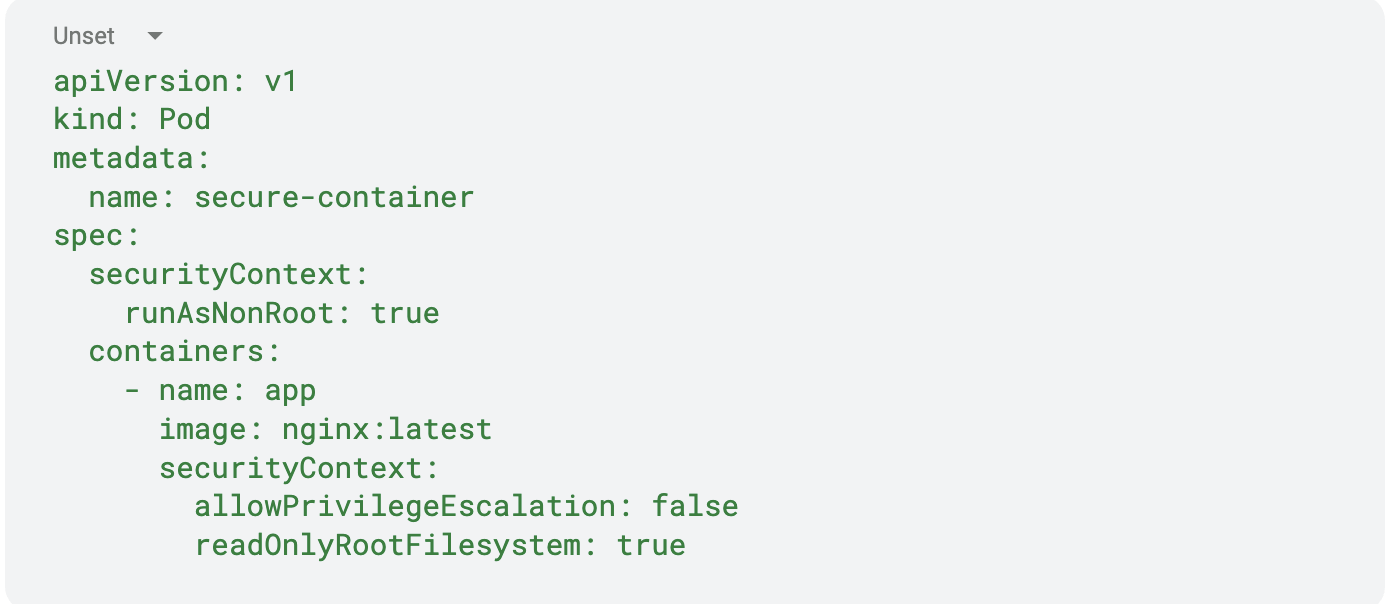

One practical way to mitigate container security risks is to enforce the principle of least privilege and scan base images for vulnerabilities. For example, in a Kubernetes environment, you can restrict container privileges with a Security Context on the pod or container, and enforce those settings across namespaces with Pod Security Admission (the replacement for Pod Security Policy, which was removed in Kubernetes 1.25). A typical hardened pod specification runs containers as a non-root user and gives them read-only access to the filesystem.

This configuration prevents privilege escalation, ensures the container runs as a non-root user, and restricts filesystem modifications—reducing the risk of an attacker gaining control over the container. By combining such security best practices with continuous image scanning, regular updates, and network segmentation, organizations can significantly reduce their container attack surface.
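The settings described above can be sketched as a small Python script that builds the pod manifest and prints it as JSON. This is a minimal illustration, not a definitive hardening baseline: the pod name, image reference, and UID are hypothetical placeholders, and the field names are the standard Kubernetes `securityContext` fields.

```python
import json


def hardened_pod(name: str, image: str, uid: int = 10001) -> dict:
    """Build a Pod manifest whose container runs non-root with a read-only root filesystem."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Pod-level setting: containers must not run as UID 0.
            "securityContext": {"runAsNonRoot": True, "runAsUser": uid},
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "securityContext": {
                        # Block setuid binaries and similar paths to more privileges.
                        "allowPrivilegeEscalation": False,
                        # The container cannot write to its root filesystem.
                        "readOnlyRootFilesystem": True,
                        # Drop every Linux capability the workload does not need.
                        "capabilities": {"drop": ["ALL"]},
                    },
                }
            ],
        },
    }


# Hypothetical name and image, used only for illustration.
manifest = hardened_pod("demo-app", "registry.example.com/demo-app:1.0.0")
print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the output can be piped to `kubectl apply -f -`. Applications that need scratch space would additionally mount a writable volume (for example an `emptyDir`) at the paths they write to.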

Insufficient API Security

APIs are the backbone of modern cloud-based applications, enabling seamless communication between services. However, if not properly secured, APIs can become a significant attack vector, exposing sensitive data and functionality to unauthorized users. Common API security risks include weak authentication, lack of rate limiting, excessive permissions, and exposure of internal endpoints. Attackers often exploit these weaknesses through API enumeration, injection attacks, or credential stuffing, potentially leading to data breaches, account takeovers, or service disruptions. Given the prevalence of APIs in cloud-based environments, securing them is critical to preventing unauthorized access and ensuring data integrity.

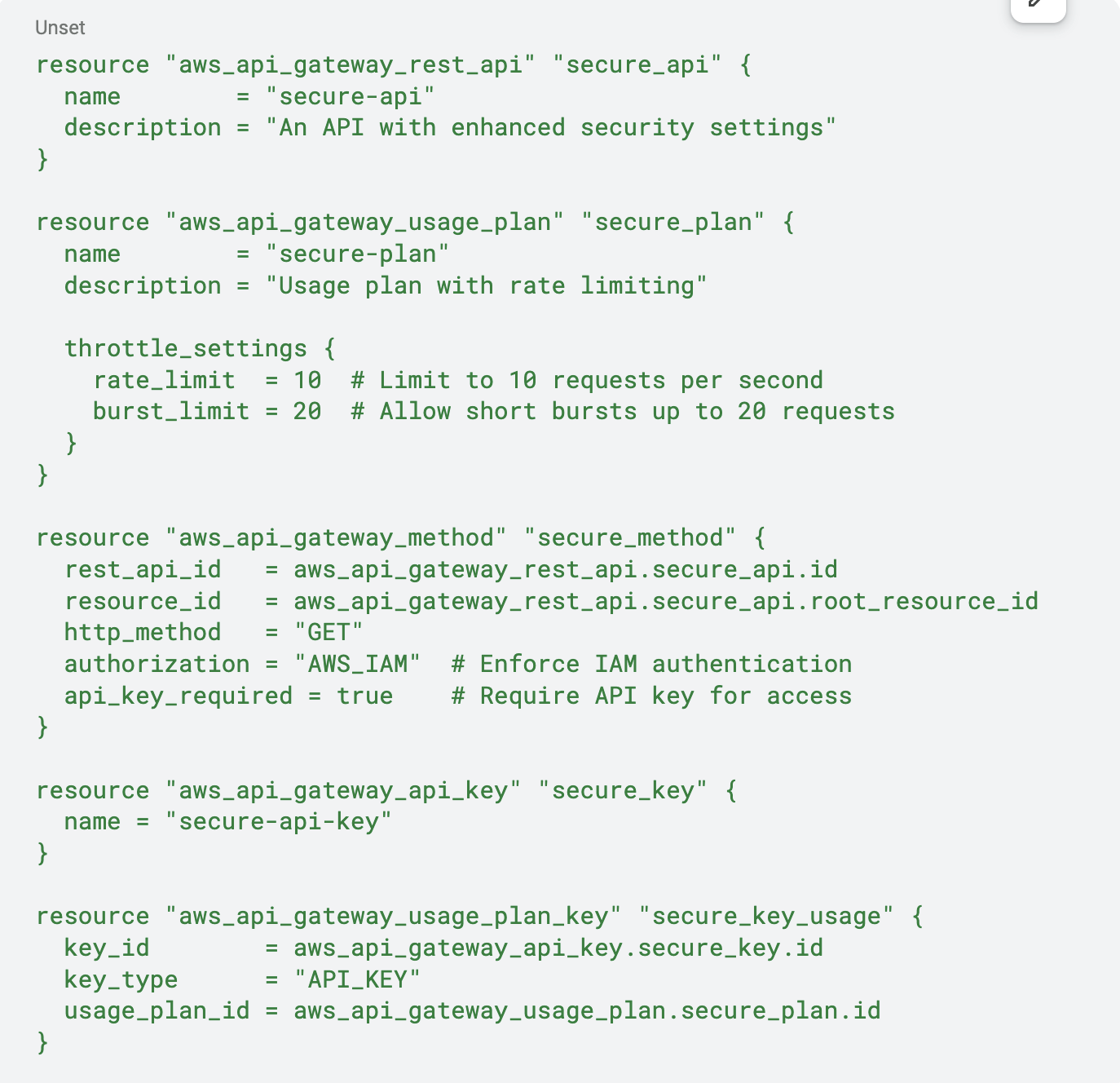

A practical way to enhance API security in AWS is to enforce authentication, authorization, and throttling with Amazon API Gateway. A Terraform configuration for an API Gateway deployment can combine three controls: an API key requirement, a usage plan with rate limiting, and IAM-based authentication.

This configuration enforces IAM-based authentication, ensuring that only authorized AWS users or roles can access the API. It also requires an API key, providing an additional layer of access control. Additionally, the usage plan limits API abuse by restricting the number of requests per second, mitigating DDoS-like attacks and preventing resource exhaustion. By implementing these security controls, organizations can significantly reduce the risks associated with API exposure while maintaining seamless and secure API interactions.
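As a rough sketch of such a configuration, the Python script below emits Terraform's JSON syntax (a `.tf.json` file) for the three controls. It assumes that a REST API, a resource, and a stage named `example`, `items`, and `prod` are defined elsewhere; those names, the key and plan names, and the throttle values are all hypothetical.

```python
import json


def api_security_config(rate_limit: float = 10, burst_limit: int = 20) -> dict:
    """Return a Terraform JSON document with IAM auth, an API key, and throttling."""
    return {
        "resource": {
            "aws_api_gateway_method": {
                "get_items": {
                    "rest_api_id": "${aws_api_gateway_rest_api.example.id}",
                    "resource_id": "${aws_api_gateway_resource.items.id}",
                    "http_method": "GET",
                    # Callers must sign requests with valid AWS credentials (SigV4).
                    "authorization": "AWS_IAM",
                    # Callers must also present an API key tied to a usage plan.
                    "api_key_required": True,
                }
            },
            "aws_api_gateway_api_key": {
                "client": {"name": "example-client-key"}
            },
            "aws_api_gateway_usage_plan": {
                "standard": {
                    "name": "standard-plan",
                    "api_stages": [
                        {
                            "api_id": "${aws_api_gateway_rest_api.example.id}",
                            "stage": "${aws_api_gateway_stage.prod.stage_name}",
                        }
                    ],
                    # Steady-state requests per second and short-term burst allowance.
                    "throttle_settings": [
                        {"rate_limit": rate_limit, "burst_limit": burst_limit}
                    ],
                }
            },
            "aws_api_gateway_usage_plan_key": {
                "client": {
                    "key_id": "${aws_api_gateway_api_key.client.id}",
                    "key_type": "API_KEY",
                    "usage_plan_id": "${aws_api_gateway_usage_plan.standard.id}",
                }
            },
        }
    }


config = api_security_config()
print(json.dumps(config, indent=2))
```

Saving the output as, say, `api_security.tf.json` next to the rest of the configuration should let `terraform plan` pick it up. The same resources can be written in HCL, and the throttle values need tuning to the API's real traffic.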

Supply Chain Attacks

Supply chain attacks have become one of the most dangerous threats in modern environments, targeting the software development lifecycle to compromise applications before they even reach production. Attackers exploit vulnerabilities in third-party dependencies, CI/CD pipelines, container registries, and package managers to inject malicious code or backdoors. High-profile incidents like the SolarWinds attack and the Log4j vulnerability (Log4Shell, CVE-2021-44228) demonstrate how a single compromised or vulnerable component can have widespread consequences, affecting thousands of organizations. Since modern applications rely on open-source libraries, external APIs, and cloud services, a single weak link in the supply chain can lead to data breaches, service disruptions, and unauthorized access.

Mitigating supply chain attacks requires a multi-layered security strategy, including software bill of materials (SBOM) generation, dependency scanning, artifact integrity verification, and runtime protection. Tools like CycloneDX (for SBOM creation), Snyk and Trivy (for vulnerability scanning), and Harbor (for secure container image management) help organizations identify and remediate risks before deployment. In an upcoming deep dive, we’ll explore a detailed supply chain security framework, outlining best practices for securing dependencies, implementing cryptographic signing, and continuously monitoring for threats across the software lifecycle. Stay tuned for a hands-on guide to fortifying your software supply chain against emerging attacks.
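Artifact integrity verification, one of the layers listed above, can be illustrated with a minimal digest check. The sketch assumes the expected SHA-256 value was pinned earlier from a trusted source, such as a lock file or a vendor's release notes; the artifact bytes here are stand-ins.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as lowercase hex."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, expected_sha256: str) -> bool:
    """Return True only if the artifact's digest matches the pinned value."""
    actual = sha256_hex(data)
    # compare_digest runs in constant time with respect to where the strings differ.
    return hmac.compare_digest(actual, expected_sha256.strip().lower())


# Stand-in bytes for a downloaded dependency or build artifact.
artifact = b"example release archive contents"
pinned = sha256_hex(artifact)  # in practice, recorded when the dependency was vetted
tampered = artifact + b" with an injected payload"

print(verify_artifact(artifact, pinned))   # True
print(verify_artifact(tampered, pinned))   # False
```

A digest check only detects changes made after the value was pinned. It says nothing about who produced the artifact, which is why cryptographic signing is usually layered on top.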

IAM Role Misuse and Privilege Escalation

Identity and Access Management (IAM) is a cornerstone of cloud security, but misconfigured roles and excessive privileges can lead to devastating breaches. Attackers often exploit IAM role misuse through overly permissive policies, privilege escalation paths, and role assumption flaws to gain unauthorized access to critical resources. Misconfigured IAM roles in AWS, Azure, or GCP can allow lateral movement, data exfiltration, or complete infrastructure takeover. Similarly, weak Role-Based Access Control (RBAC) settings in Kubernetes can enable unauthorized users to escalate privileges, modify workloads, or access sensitive data. Without strict least privilege enforcement, cloud environments become prime targets for privilege escalation attacks.

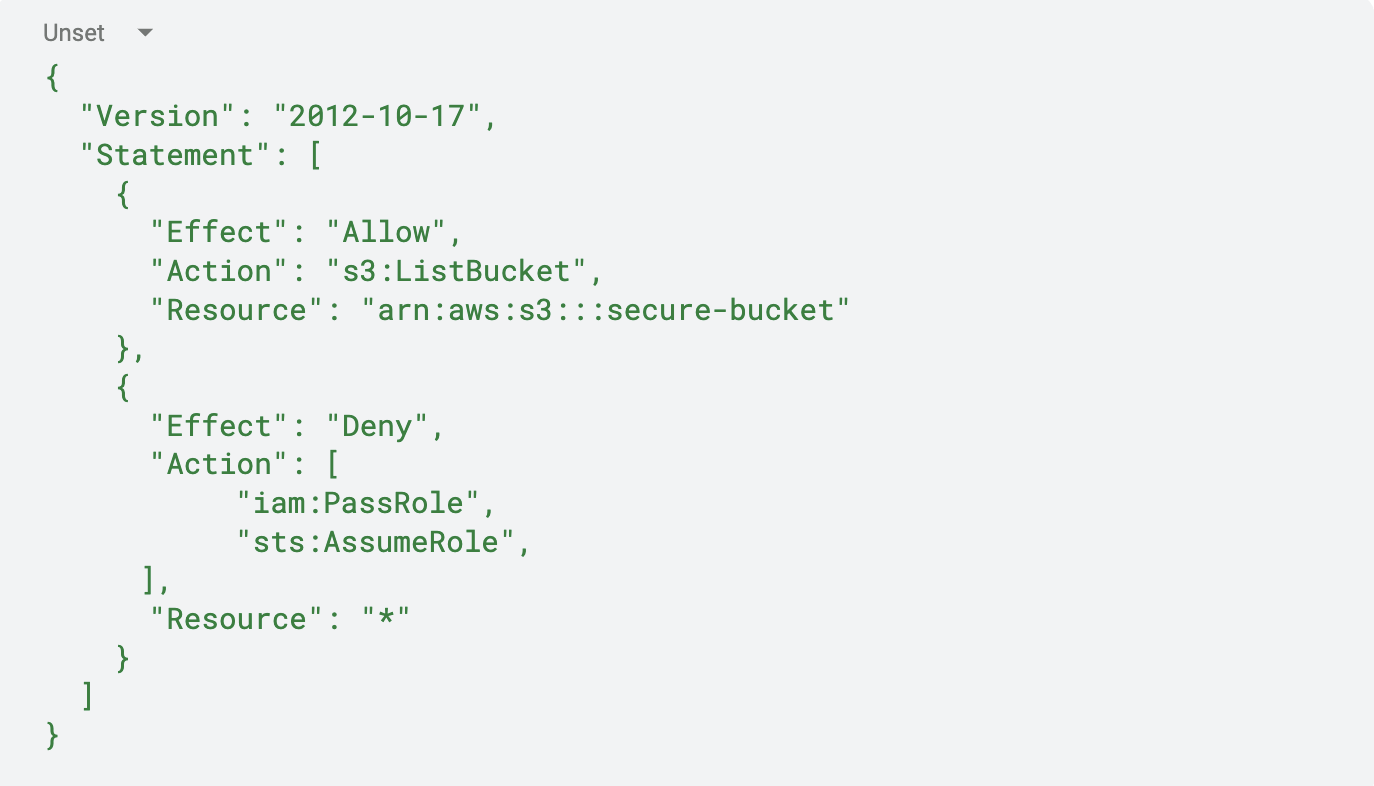

To mitigate these risks, organizations should implement the principle of least privilege (PoLP), enforce IAM policy restrictions, and audit role assignments regularly. Below is an example of a secure AWS IAM JSON policy that grants read-only access to an S3 bucket while preventing privilege escalation:

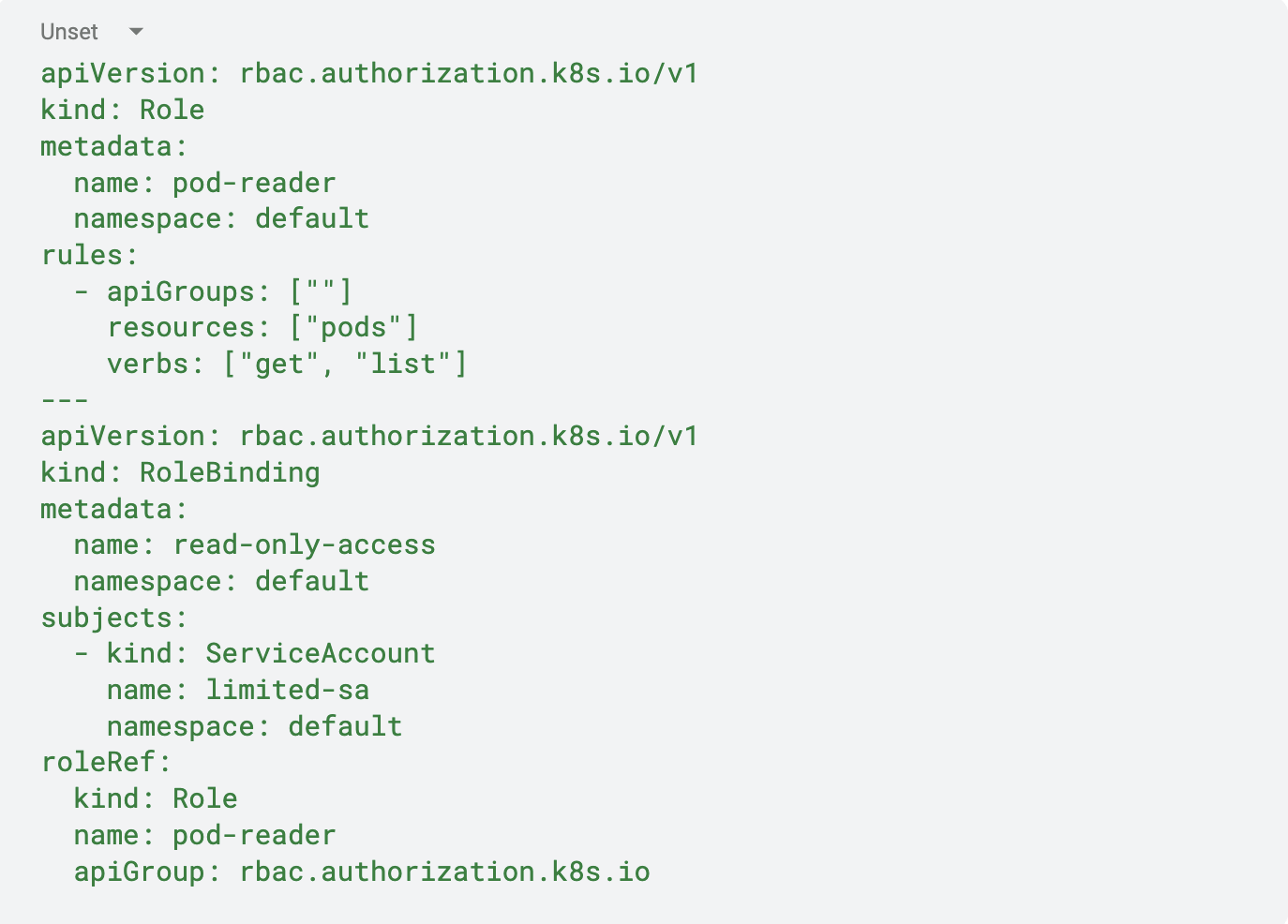
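For readers who want a text version to experiment with, a policy of the kind described above might look like the following sketch. The bucket name is hypothetical, the explicit deny statement is one possible way to block identity changes, and `wildcard_allows` is a small illustrative helper rather than a replacement for a real policy analyzer.

```python
import json

BUCKET = "example-reports-bucket"  # hypothetical bucket name

policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ListBucket",
            "Effect": "Allow",
            "Action": ["s3:ListBucket"],
            "Resource": [f"arn:aws:s3:::{BUCKET}"],
        },
        {
            "Sid": "ReadObjects",
            "Effect": "Allow",
            "Action": ["s3:GetObject"],
            "Resource": [f"arn:aws:s3:::{BUCKET}/*"],
        },
        {
            # Explicit deny overrides any Allow granted by another attached policy.
            "Sid": "DenyIdentityChanges",
            "Effect": "Deny",
            "Action": ["iam:*", "sts:AssumeRole"],
            "Resource": "*",
        },
    ],
}


def wildcard_allows(doc: dict) -> list:
    """Return the Sids of Allow statements that grant '*' or 'service:*' actions."""
    flagged = []
    for stmt in doc["Statement"]:
        actions = stmt["Action"]
        if isinstance(actions, str):
            actions = [actions]
        broad = any(a == "*" or a.endswith(":*") for a in actions)
        if stmt["Effect"] == "Allow" and broad:
            flagged.append(stmt.get("Sid", "<no Sid>"))
    return flagged


print(json.dumps(policy, indent=2))
print(wildcard_allows(policy))  # []
```

A check like `wildcard_allows` could run in CI to reject policies that quietly widen access, though dedicated tools such as IAM Access Analyzer cover far more cases.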

Similarly, in Kubernetes, RBAC can be configured to restrict access to sensitive resources. For example, a Role bound to a service account can grant only the list verb on pods, so the account cannot modify them or escalate privileges.

By enforcing strict IAM policies and Kubernetes RBAC, organizations can prevent privilege escalation, reduce attack surfaces, and limit the impact of potential breaches. Regular auditing, automated policy checks, and real-time monitoring further strengthen security against IAM-based threats.
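The list-only RBAC setup described above can be sketched as a Role and RoleBinding built in Python and printed as a JSON `List` object. The namespace, service account, and object names are hypothetical, and the `allows` helper is a simplified illustration of how rules are matched, not the API server's authorizer.

```python
import json

NAMESPACE = "demo"               # hypothetical namespace
SERVICE_ACCOUNT = "pod-lister"   # hypothetical service account

role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "pod-list-only", "namespace": NAMESPACE},
    # Core API group (""), pods only, and only the "list" verb.
    "rules": [{"apiGroups": [""], "resources": ["pods"], "verbs": ["list"]}],
}

binding = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "RoleBinding",
    "metadata": {"name": "pod-list-only-binding", "namespace": NAMESPACE},
    "subjects": [
        {"kind": "ServiceAccount", "name": SERVICE_ACCOUNT, "namespace": NAMESPACE}
    ],
    "roleRef": {
        "apiGroup": "rbac.authorization.k8s.io",
        "kind": "Role",
        "name": "pod-list-only",
    },
}


def allows(role_doc: dict, resource: str, verb: str) -> bool:
    """Simplified check: does any rule in the Role grant verb on resource?"""
    for rule in role_doc["rules"]:
        resource_ok = resource in rule["resources"] or "*" in rule["resources"]
        verb_ok = verb in rule["verbs"] or "*" in rule["verbs"]
        if resource_ok and verb_ok:
            return True
    return False


bundle = {"apiVersion": "v1", "kind": "List", "items": [role, binding]}
print(json.dumps(bundle, indent=2))
```

After applying the output, `kubectl auth can-i list pods --as=system:serviceaccount:demo:pod-lister -n demo` should answer yes, while the same query for `delete` should answer no.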

Insider Threats: A Hidden Risk in Cloud-Native Environments

While external threats often dominate the cybersecurity conversation, insider threats pose an equally significant risk to modern enterprises. These threats can originate from malicious actors within the organization, such as disgruntled employees, or from well-meaning individuals who inadvertently expose sensitive data or systems through negligence or lack of awareness. In cloud-enabled environments, where infrastructure is highly dynamic and access controls are often distributed, the potential for insider threats is amplified. For example, an employee with excessive permissions might accidentally misconfigure a cloud storage bucket, exposing sensitive data to the public internet, or a developer might intentionally exfiltrate proprietary code before leaving the company. The consequences of such actions can be devastating, ranging from data breaches and financial losses to reputational damage and regulatory penalties.

Addressing insider threats requires a proactive and comprehensive approach that balances security with operational efficiency. In an upcoming article, we will explore key strategies for mitigating insider risks, including:

• Least Privilege & Zero Trust – Ensure users have only the minimum permissions necessary, and assume no internal actor is inherently trustworthy.

• Strong Identity & Access Management (IAM) – Enforce multi-factor authentication (MFA), session monitoring, and strict role-based access controls (RBAC).

• Audit Logging & Security Monitoring – Implement SIEM (Security Information and Event Management) tools to detect anomalies in cloud environments.

• Immutable Infrastructure & GitOps – Prevent unauthorized modifications by enforcing infrastructure-as-code (IaC) principles.

• User and Entity Behavior Analytics (UEBA) – Use AI-driven behavioral monitoring to detect unusual user activity.

• Data Loss Prevention (DLP) & Encryption – Monitor and restrict unauthorized data transfers, ensuring sensitive data is encrypted at rest and in transit.

• Continuous Security Training & Insider Threat Awareness – Educate employees on the risks and warning signs of insider threats.

Stay tuned to learn how to build a resilient defense against insider threats while maintaining the agility and innovation that cloud-native technologies enable.

Inadequate Network Segmentation

Inadequate network segmentation refers to the failure to properly isolate workloads, services, and environments, allowing attackers or unauthorized users to move laterally within the infrastructure. Unlike traditional on-premises environments with well-defined network perimeters, cloud architectures rely on microservices, APIs, and dynamic scaling, making it essential to implement granular segmentation controls. Without proper segmentation, a single compromised container, pod, or API can expose the entire network, leading to data breaches, privilege escalation, or full infrastructure compromise.

Key Risks of Poor Network Segmentation:

• Lateral Movement: Attackers can pivot from a compromised service to other critical workloads if network rules are too permissive.

• Overexposed Services: Kubernetes namespaces without network policies, unrestricted VPC peering, or misconfigured security groups can expose internal services to attackers.

• Data Exfiltration: Poorly segmented environments make it easier for adversaries to extract sensitive data without detection.

• Lack of Environment Isolation: Mixing dev, test, and production environments without proper segmentation can allow attackers to escalate attacks from non-critical to critical systems.

How to Mitigate Inadequate Network Segmentation:

• Use Kubernetes Network Policies – Restrict pod-to-pod communication based on application needs.

• Enforce Zero Trust Networking (ZTN) – Require identity-based authentication for all internal service communications.

• Segment VPCs and Subnets – Use separate Virtual Private Clouds (VPCs), security groups, and private subnets for different workloads.

• Implement Service Mesh Security – Use tools like Istio, Linkerd, or Consul to enforce mTLS encryption and fine-grained service-to-service access controls.

• Monitor and Log Network Traffic – Use AWS VPC Flow Logs, GCP Packet Mirroring, or Azure Network Watcher to detect anomalous traffic patterns.

Proper network segmentation reduces the attack surface, prevents unauthorized access, and strengthens the overall security posture of cloud-native applications.
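The first mitigation above, Kubernetes Network Policies, can be sketched as a default-deny policy plus one narrow allow rule. The namespace, app labels, and port are hypothetical, and the sketch assumes the cluster's network plugin enforces NetworkPolicy objects, which not every plugin does.

```python
import json


def default_deny(namespace: str) -> dict:
    """Deny all ingress and egress for every pod in the namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            "podSelector": {},  # an empty selector matches all pods in the namespace
            "policyTypes": ["Ingress", "Egress"],
        },
    }


def allow_ingress(namespace: str, target_app: str, source_app: str, port: int) -> dict:
    """Allow TCP traffic to target_app pods only from source_app pods on one port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {
            "name": f"allow-{source_app}-to-{target_app}",
            "namespace": namespace,
        },
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


# Hypothetical namespace and labels, used only for illustration.
policies = [
    default_deny("shop"),
    allow_ingress("shop", target_app="backend", source_app="frontend", port=8080),
]
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": policies}, indent=2))
```

Note that denying all egress also blocks DNS lookups, so a real rollout usually adds an egress rule for the cluster DNS service, and for the frontend's outbound traffic to the backend, before the default-deny policy is applied.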

Kubernetes Security Risks: Navigating the Complex Threat Landscape

Kubernetes has become the de facto orchestration platform for managing containerized applications, but its complexity and flexibility come with significant security challenges. As organizations scale their cloud-native workloads, they must contend with a variety of risks that can compromise the integrity, availability, and confidentiality of their clusters. Common threats include misconfigured Role-Based Access Control (RBAC), insecure API server exposure, vulnerable container images, and inadequate network segmentation. Additionally, issues like improper Secrets management, unpatched components, and privilege escalation through host access further exacerbate the risks. Without proper safeguards, attackers can exploit these weaknesses to gain unauthorized access, disrupt operations, or exfiltrate sensitive data. Understanding these risks is the first step toward building a secure Kubernetes environment.

In an upcoming article, we will dive deep into strategies for mitigating Kubernetes security risks, offering actionable insights to help you fortify your clusters. From enforcing least privilege and zero-trust principles to implementing robust network policies and runtime security measures, we’ll cover the essential practices for safeguarding your environment. We’ll also explore advanced techniques such as behavioral analytics for anomaly detection, immutable infrastructure to prevent unauthorized changes, and the importance of continuous monitoring and auditing. Whether you’re a DevOps engineer, security professional, or IT leader, this guide will equip you with the knowledge to address Kubernetes security risks effectively. Stay tuned to learn how to turn your Kubernetes deployment into a secure, resilient foundation for your applications on the cloud.

Insecure Data Storage and Transmission

Insecure data storage and transmission pose significant risks to cloud-native applications, where sensitive information is often distributed across dynamic and interconnected environments. Data at rest, such as databases, object storage (e.g., S3 buckets), and configuration files, can be exposed if encryption is not properly implemented or access controls are misconfigured. Similarly, data in transit, such as API communications, service-to-service interactions, and user traffic, is vulnerable to interception if transmitted over unencrypted channels or using weak cryptographic protocols. These risks are compounded by the complexity of modern cloud-native architectures, where data flows across multiple services, third-party integrations, and geographically distributed systems. Without robust safeguards, organizations face the threat of data breaches, compliance violations, and reputational damage.

To address these challenges, organizations must adopt a multi-layered approach to secure data storage and transmission. A service mesh, such as Istio or Linkerd, can enforce encryption-in-transit for all service-to-service communications, ensuring that data is protected as it moves through the network. For encryption at rest, leveraging cloud-native solutions like AWS KMS, Azure Key Vault, or Google Cloud KMS ensures that sensitive data stored in databases or object storage is securely encrypted. Secrets management tools, such as HashiCorp Vault or AWS Secrets Manager, help securely store and manage credentials, API keys, and certificates, reducing the risk of accidental exposure. Additionally, best practices for object storage—such as enabling versioning, access logging, and strict bucket policies—can further enhance security. By combining these strategies, organizations can build a resilient defense against insecure data storage and transmission, safeguarding their cloud-native applications and infrastructure.

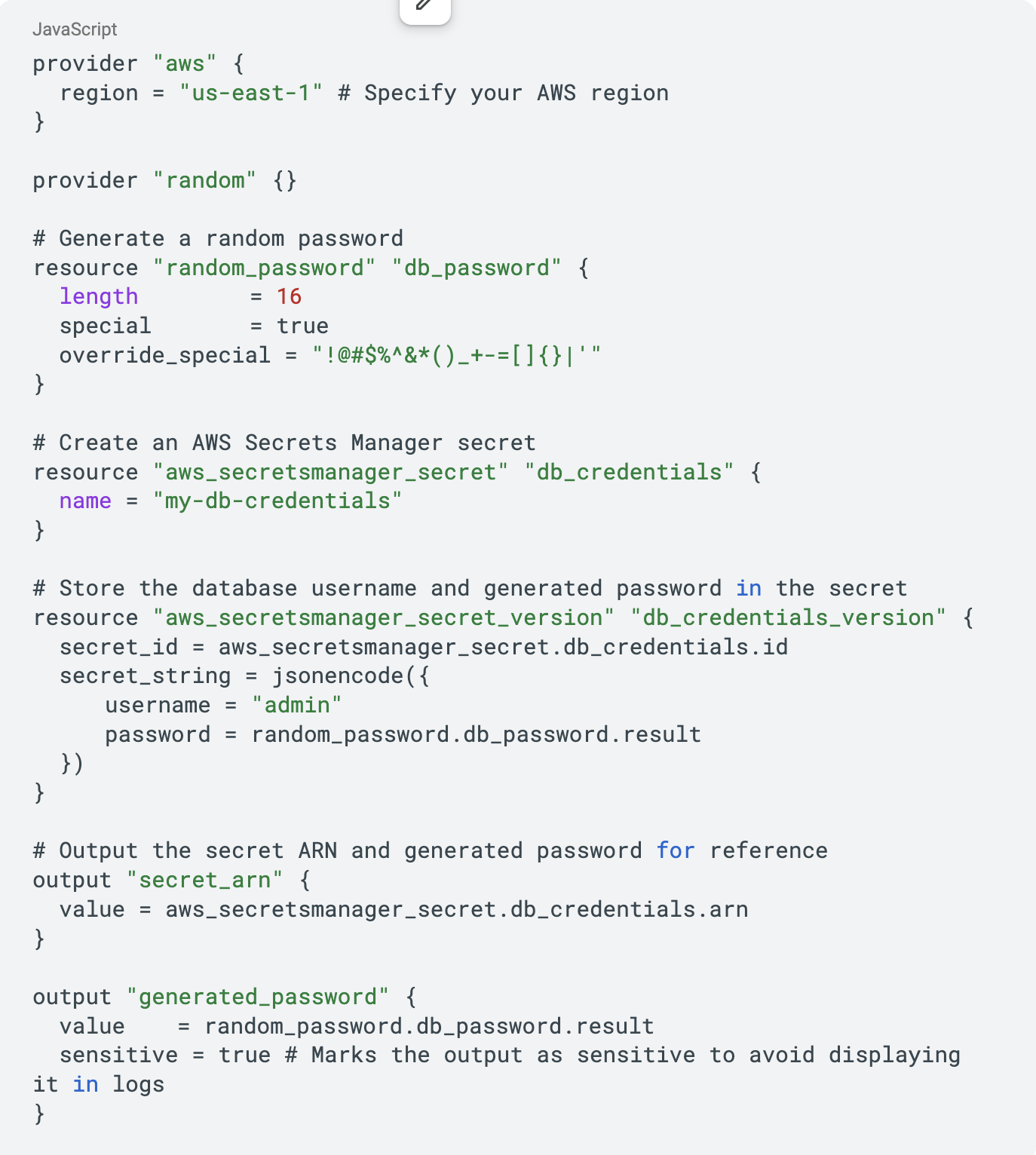

With Terraform, you can create an AWS Secrets Manager secret and use its value in another resource, such as an Amazon RDS database instance. This keeps sensitive information (e.g., database credentials) out of source code while integrating secret management into your infrastructure-as-code workflow.
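A minimal sketch of that pattern follows, written as a Python script that emits Terraform's JSON syntax. The resource labels, secret name, database settings, and the use of the `random_password` resource from the `hashicorp/random` provider are assumptions made for illustration.

```python
import json

config = {
    "resource": {
        # Generate the password inside Terraform so it never appears in source code.
        "random_password": {
            "db": {"length": 32, "special": False}
        },
        "aws_secretsmanager_secret": {
            "db": {"name": "example/app/db-password"}
        },
        "aws_secretsmanager_secret_version": {
            "db": {
                "secret_id": "${aws_secretsmanager_secret.db.id}",
                "secret_string": "${random_password.db.result}",
            }
        },
        "aws_db_instance": {
            "app": {
                "identifier": "example-app-db",
                "engine": "postgres",
                "instance_class": "db.t3.micro",
                "allocated_storage": 20,
                "username": "app_admin",
                # Reference the stored secret instead of a literal value.
                "password": "${aws_secretsmanager_secret_version.db.secret_string}",
                "storage_encrypted": True,
                "skip_final_snapshot": True,
            }
        },
    }
}

print(json.dumps(config, indent=2))
```

One caveat: the generated password still ends up in the Terraform state file, so the state backend needs encryption and tight access control. Applications should read the credential from Secrets Manager at runtime rather than from Terraform outputs.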

Lack of Monitoring and Logging: A Silent Threat to Cloud-Native Environments

In cloud-native environments, where applications and infrastructure are highly dynamic and distributed, the lack of robust monitoring and logging can leave organizations blind to critical issues such as performance bottlenecks, security breaches, and system failures. Without comprehensive visibility into the health and behavior of your systems, detecting and responding to incidents becomes a reactive and often delayed process. For example, an unnoticed memory leak in a microservice could lead to a cascading failure, or an undetected intrusion could result in a significant data breach. Additionally, compliance requirements often mandate detailed logging and monitoring, making their absence not just a technical risk but also a legal and regulatory one. In a world where downtime and data breaches can have severe financial and reputational consequences, the absence of monitoring and logging is a risk that organizations cannot afford to take.

To address these risks, organizations can implement a comprehensive monitoring and alerting stack using Prometheus, Alertmanager, and Grafana on Kubernetes. Prometheus, a powerful open-source monitoring and alerting toolkit, is designed for cloud-native environments and excels at collecting and querying metrics from Kubernetes clusters, applications, and infrastructure. Alertmanager, a companion to Prometheus, handles alerts generated by Prometheus, deduplicates them, groups related alerts, and routes them to the appropriate notification channels (e.g., email, Slack, PagerDuty). When paired with Grafana, a visualization and analytics platform, teams can create dashboards that provide real-time insights into system performance, resource utilization, and application behavior. Setting up this stack on Kubernetes involves deploying Prometheus and Alertmanager as part of your cluster, configuring Prometheus to scrape metrics from your workloads, defining alerting rules, and using Grafana to visualize the data. The benefits are immense: proactive detection of anomalies, faster incident response through timely alerts, and the ability to correlate metrics across services for root cause analysis. Together, these tools empower teams to maintain operational excellence, ensure security, and meet compliance requirements in even the most complex cloud-native environments.
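The "defining alerting rules" step can be illustrated with a small script that writes one Prometheus alerting rule. The group name, alert name, severity label, and the five-minute window are hypothetical choices; `up` is the metric Prometheus records for every scrape target. The script emits JSON, which YAML parsers generally accept, but it is worth confirming the file with `promtool check rules` before loading it.

```python
import json

rules = {
    "groups": [
        {
            "name": "availability",
            "rules": [
                {
                    "alert": "TargetDown",
                    # `up` is 0 when Prometheus fails to scrape a target.
                    "expr": "up == 0",
                    # Fire only if the condition holds for five minutes.
                    "for": "5m",
                    # Alertmanager can route on this label (e.g. to PagerDuty).
                    "labels": {"severity": "critical"},
                    "annotations": {
                        "summary": "{{ $labels.job }} target {{ $labels.instance }} is down"
                    },
                }
            ],
        }
    ]
}


def missing_fields(rule_file: dict) -> list:
    """Return (group, index) pairs for alerting rules that lack 'alert' or 'expr'."""
    problems = []
    for group in rule_file["groups"]:
        for index, rule in enumerate(group["rules"]):
            if "alert" not in rule or "expr" not in rule:
                problems.append((group["name"], index))
    return problems


print(json.dumps(rules, indent=2))
print(missing_fields(rules))  # []
```

Routing is configured separately in Alertmanager, where a route matching `severity: critical` could page the on-call engineer while lower severities go to a chat channel.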

Conclusion

As organizations increasingly adopt cloud-native technologies to fuel innovation and scalability, addressing security risks has become a top priority. The challenges are diverse, ranging from container vulnerabilities and API security gaps to supply chain attacks and insider threats. However, by leveraging the right strategies—such as enforcing least privilege, deploying robust monitoring and logging systems, and utilizing tools like Prometheus, Grafana, and Alertmanager—teams can create secure, resilient environments ready to face evolving threats.

This series aims to dive into the most pressing security risks for cloud-native enterprises, providing actionable insights and real-world examples to guide developers, security professionals, and IT leaders. By embracing a proactive, layered security approach, organizations can not only reduce risks but also fully harness the power of cloud-native technologies. As threats continue to grow in sophistication, staying informed and proactive will be essential for maintaining a secure and innovative cloud ecosystem. Keep an eye out for in-depth articles covering these topics as we work together to build a safer digital future.
