Enhancing Data Security and Reliability in LLMs with RAG Architecture

In a landscape where artificial intelligence is constantly pushing boundaries, the emergence of Large Language Models (LLMs) is both a marvel and a challenge. LLMs have propelled AI into new realms of possibility, but they also bring inherent pitfalls, particularly in data security and content accuracy. Retrieval Augmented Generation (RAG) architecture is emerging as a promising way to navigate this difficult terrain.

LLMs have brought artificial intelligence to a much wider audience. But like any new technology, they come with their own challenges: they can generate plausible but factually incorrect content, and the way they handle sensitive data raises critical privacy and security concerns. These issues underscore the need for solutions that improve both the accuracy and the safety of LLM outputs.

This is where the RAG architecture comes into play. RAG integrates external, verified data sources into the language generation process, addressing both the accuracy and the data security issues inherent in traditional LLMs.

Why RAG Matters: Addressing Hallucinations and Data Security

RAG tackles both problems by giving the model a more controlled environment to work in. Instead of relying solely on what a language model absorbed during training, it combines the strengths of neural network-based language models with those of information retrieval systems.

One of the inherent challenges with LLMs is their tendency to produce 'hallucinations': content that sounds plausible but is not accurate, which can lead to misinformation and undermine reliability. RAG addresses this by retrieving and referencing actual data while the response is being generated, grounding the LLM's output in verifiable sources. This hybrid approach improves the model's ability to produce correct and contextually relevant responses.

Traditional LLMs also raise legitimate data protection and security concerns, particularly where sensitive company data is involved. In a RAG setup, that data is not built into the model. It stays in a separate knowledge source and is retrieved only when a query needs it. Separating the model's processing from the data in this way significantly reduces the risk of unintentional disclosure and helps keep sensitive information safeguarded.

Deep Dive: How Does RAG Work?

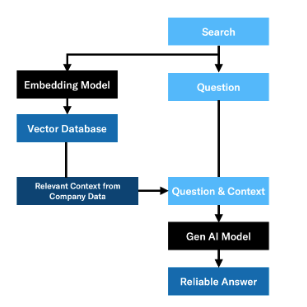

At its core, RAG operates in two distinct phases: retrieval and generation. In the retrieval phase, the system queries a knowledge base to find data relevant to the input query. This search is typically powered by keyword matching or vector similarity search, which can efficiently sift through large volumes of data to surface the most relevant pieces of information. The retrieved data is then fed into the generation phase, where the language model uses it to craft responses that are not only coherent but also factually grounded.
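To make the retrieval phase more concrete, here is a minimal Python sketch. It rests on simplifying assumptions. The knowledge base is an in-memory list of strings, and relevance is scored with bag-of-words cosine similarity instead of the neural embeddings and vector databases that production RAG systems usually rely on. The names `tokenize`, `cosine_similarity` and `retrieve` are hypothetical helpers and do not come from any library.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and keep runs of letters/digits as tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    # Dot product over shared terms, divided by the product of the vector norms.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Retrieval phase (toy version): return up to top_k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(query_vec, Counter(tokenize(doc))), doc) for doc in documents]
    # Highest score first; documents sharing no terms with the query are dropped.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


if __name__ == "__main__":
    # Hypothetical example knowledge base.
    knowledge_base = [
        "Invoices are archived for ten years in the finance system.",
        "Employees can request remote work via the HR portal.",
        "The finance team closes the books on the fifth working day.",
    ]
    print(retrieve("How long are invoices archived?", knowledge_base, top_k=1))
    # prints ['Invoices are archived for ten years in the finance system.']
```

Swapping the word counts for embedding vectors would keep the same overall structure: score every candidate against the query, then hand only the top few to the generation phase.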

Technically, RAG combines the capabilities of a powerful language model with an external knowledge source, typically a large database or document store. This integration lets RAG pull in relevant information dynamically, as each query requires, and use it to guide the language model's response, commonly by placing the retrieved passages in the model's prompt as context. This improves the accuracy of the responses and allows for more nuanced, better-informed content.
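A common way to let retrieved data guide generation is to insert it into the prompt. The Python sketch below makes two assumptions. First, the passages have already been retrieved. Second, the model can be reached through any function that takes a prompt string and returns text. The names `build_augmented_prompt`, `answer` and the `llm` parameter are hypothetical, and the prompt wording is just one possible choice.

```python
from typing import Callable


def build_augmented_prompt(question: str, passages: list[str]) -> str:
    """Insert the retrieved passages into the prompt as numbered context."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer(question: str, passages: list[str], llm: Callable[[str], str]) -> str:
    """Generation phase: the model sees the question together with the retrieved context."""
    if not passages:
        # Nothing relevant was retrieved, so do not let the model answer unguided.
        return "I don't know based on the available sources."
    return llm(build_augmented_prompt(question, passages))


if __name__ == "__main__":
    retrieved = ["Invoices are archived for ten years in the finance system."]
    print(build_augmented_prompt("How long are invoices archived?", retrieved))
    # Hypothetical stand-in for a real model call; replace with your provider's client.
    fake_llm = lambda prompt: "Ten years [1]."
    print(answer("How long are invoices archived?", retrieved, llm=fake_llm))
```

Numbering the passages lets the model point to its sources. Returning early when nothing was retrieved is one simple guard against the model answering without any grounding.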

Conclusion and Outlook

Implementing a RAG architecture is a step forward in making AI more reliable and secure. As we continue to explore the capabilities of RAG at Hivemind Technologies, we see immense potential for its application across industries such as healthcare and finance, where accuracy and data security are paramount.

Journey Beyond the Ordinary with Hivemind's Expertise

Hivemind Technologies stands ready to guide your business or organisation in harnessing the full potential of LLMs with RAG. Whether it's integrating these models into existing systems or exploring new applications, we offer the expertise and insights to navigate this new era of AI-driven innovation.

Get in touch to see how we can customise LLMs for your specific needs, and be part of a journey where technology goes beyond the ordinary.