Could Kubernetes have existed without Docker?

In 2013, on a sunny day in Santa Clara at PyCon, a young developer named Solomon Hykes took the stage to present his talk titled "The future of Linux Containers".

He opened his laptop and began describing an open source project that could package your application, set up its networking, and deploy it on a Linux machine.

The attendees immediately grasped the potential of the technology, sensing that something in the software industry was changing.

That software was called Docker.

Three months later, at dotScale 2013 in Paris, Solomon presented Docker again and, with confidence and a strong stage presence, proclaimed that Docker would revolutionize the way software is developed and run in production.

Despite the skepticism of some in the audience, Solomon's conviction in his vision was unwavering. He did not arrive that day in a DeLorean, but his ideas about the future of application development were bold and forward-thinking. So why was he so sure?

Was it just a pumped-up marketing declaration?

Docker in the sky (with Containers)

At the time, there was a feeling that something was happening with container technologies, and there was quite a buzz around them in Silicon Valley.

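In fact, containers were not a new idea. About a decade before Solomon's presentation, operating systems such as FreeBSD (with Jails) and Solaris (with Zones) already offered container-style isolation, and big tech companies like Google were already running their workloads in containers internally.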

The problem was that these container setups had been built and customized for internal purposes and were really complex to configure: you needed a lot of expertise and large hardware infrastructure, so they were accessible only to a few groups of experts.

Docker changed that. It delivered a portable container management platform that anyone could use at any scale, from a laptop to the cloud. It was also extremely flexible: you could develop your software in any language you liked, such as Scala, Ruby, Python, or Go, and put it in a box called a "container".

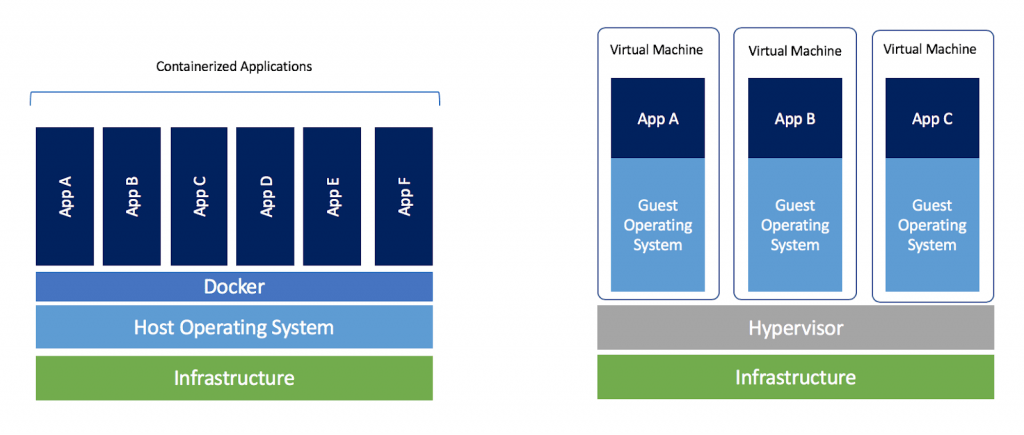

Docker also changed the infrastructure world, because we no longer necessarily needed a big datacenter or powerful servers to run our applications; potentially, a laptop was enough. Why? Because Docker runs on the host OS as a container manager, and every container shares the host's Linux kernel instead of booting its own operating system, which makes containers flexible and lightweight.

By comparison, with virtualization at that time (e.g. VMware), each VM ran its own full guest OS, so you needed much more hardware, an OS license per VM, and a license for the hypervisor: in the end, much more money.

Below is Docker's role at the infrastructure level:

Beyond “Borg”

What came next after the Docker release? The big tech companies sensed a gap to fill: how to orchestrate containers across many machines. That became the next “gold rush” for Big Tech.

In 2013, in fact, they were fighting each other for the lead in cloud computing, because at that time AWS cast a long shadow over competitors such as Microsoft and, especially, Google.

Google had been using a container orchestration system called Borg to manage their internal applications for over a decade. Borg was able to manage containers at an unprecedented scale and was a key factor in Google's ability to deliver reliable, scalable services.

However, Borg was not designed to be used outside of Google and was not available to the wider community.

Google saw an opportunity here: they felt the right move was to ride the Docker wave of that moment and release a “Borg-like” system as an open source project.

So the development team quickly put together a rudimentary prototype to convince Urs Hölzle, Google's head of technical infrastructure, that it was the right time to build a Borg-like system and release it to the world.

The team wanted to open-source not only the “Borg-like” system but all the orchestration infrastructure around it, which at that time was not considered a good idea by Google leadership.

After a lot of back and forth, they finally convinced leadership that creating this container orchestration platform was the right move.

With the green light given, it was time to get real and draw up a concrete plan for final approval.

At the first DockerCon, in June 2014, Google announced the release of an open source project called “Kubernetes” (Greek for “helmsman” or “pilot”).

The power of Open Source

As mentioned before, Google leadership was initially unhappy about developing an open source project, because of course they wanted to monetize their effort.

Also, other container orchestration platforms were already around or about to appear, such as Apache Mesos (already adopted by Apple, Netflix, and Airbnb) and Docker Swarm, so Kubernetes entered the market as a new player.

During development, Google directly involved a few companies, such as Red Hat and CoreOS, in the work. Part of Kubernetes' strength, though, was the vast army of contributors and the huge community behind it, which kept the project marching along with a high number of commits per day.

Since Kubernetes was born as an open source project, Google decided to formalize it by creating an official body where companies could collaborate on building their platforms around Kubernetes and define standards for them.

So when Google presented Kubernetes 1.0 at OSCON 2015, it also announced the formation of the Cloud Native Computing Foundation (CNCF) under the Linux Foundation, chartered to take Kubernetes and adjacent technologies, harmonize them, and ultimately advance the agenda of cloud-native computing for everybody.

Along the way, many companies, start-ups, and open source projects grew up around Kubernetes, building on it or integrating with it; to mention just a few projects: Argo CD, Helm, and Prometheus.

Kubernetes' role in this wind of change

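Initially, Kubernetes used Docker as its container runtime. As the ecosystem matured, Kubernetes introduced the Container Runtime Interface (CRI) so that it could work with other runtimes as well, such as containerd and CRI-O, and it eventually removed its built-in Docker Engine integration (dockershim) in version 1.24. Images built with Docker, however, still run on Kubernetes unchanged.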

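Kubernetes is built on a concept called “promise theory”. Even though you may have many machines in your Kubernetes cluster, and any of them can fail at any time, Kubernetes' job is to make sure your application keeps running. It does this by continuously comparing the desired state you declare with the actual state of the cluster and acting to close the gap.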
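
To make the idea concrete, here is a minimal Python sketch of the kind of reconciliation loop behind this behavior. It is a toy model, not the Kubernetes API: `ToyCluster` and `reconcile` are hypothetical names, and a "cluster" is reduced to a set of replica names. The loop compares the desired replica count with what is actually running and starts or stops replicas until the two match, which is the essence of how a controller keeps an application running after a machine fails.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """Hypothetical stand-in for a cluster: just the names of running replicas.

    This is NOT the Kubernetes API; it only models desired vs. actual state.
    """
    running: set[str] = field(default_factory=set)
    next_id: int = 0


def reconcile(cluster: ToyCluster, desired: int) -> tuple[int, int]:
    """Start or stop replicas until the actual count matches `desired`.

    Returns (started, stopped) so the caller can see what the loop did.
    """
    started = stopped = 0
    while len(cluster.running) < desired:       # too few: start replacements
        cluster.running.add(f"app-{cluster.next_id}")
        cluster.next_id += 1
        started += 1
    while len(cluster.running) > desired:       # too many: stop the extras
        cluster.running.pop()
        stopped += 1
    return started, stopped


cluster = ToyCluster()
print(reconcile(cluster, 3))        # (3, 0): nothing was running yet
cluster.running.discard("app-1")    # simulate a machine failure killing a replica
print(reconcile(cluster, 3))        # (1, 0): the loop starts a replacement
print(sorted(cluster.running))      # ['app-0', 'app-2', 'app-3']
```

In a real cluster this comparison runs continuously rather than on demand, so a failure is noticed and repaired without anyone calling the loop by hand.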

One of the key benefits of Kubernetes is its orchestration capabilities: it can manage complex deployments, handling tasks such as scaling, load balancing, and rolling updates. This makes it a strong choice for managing containerized applications at scale.
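
A rolling update, one of the tasks mentioned above, can be sketched in a few lines of Python. This is an illustrative model under stated assumptions, not Kubernetes code: `rolling_update` is a hypothetical function, and its `max_surge` parameter only loosely mirrors the `maxSurge` setting of a Deployment's rolling update strategy. Each step starts new-version replicas first and only then retires old ones, so the running count never drops below the original (similar in spirit to `maxUnavailable: 0`). A real Deployment also waits for new Pods to become ready; this sketch assumes every new replica is healthy immediately.

```python
def rolling_update(replicas: list[str], new_version: str, max_surge: int = 1) -> list[list[str]]:
    """Replace every replica with `new_version`, a batch at a time.

    Hypothetical sketch: each step starts up to `max_surge` new replicas and
    then stops the same number of old ones, so the running count never drops
    below the original count. Returns a snapshot of the replicas after each step.
    """
    if max_surge < 1:
        raise ValueError("max_surge must be at least 1, or the update never progresses")
    current = list(replicas)
    snapshots: list[list[str]] = []
    while any(v != new_version for v in current):
        old_count = sum(v != new_version for v in current)
        batch = min(max_surge, old_count)
        current.extend([new_version] * batch)    # surge: start new replicas first
        for _ in range(batch):                   # then retire old ones, first in list order
            current.remove(next(v for v in current if v != new_version))
        snapshots.append(list(current))
    return snapshots


for step in rolling_update(["v1", "v1", "v1"], "v2"):
    print(step)
# ['v1', 'v1', 'v2']
# ['v1', 'v2', 'v2']
# ['v2', 'v2', 'v2']
```

Raising `max_surge` trades extra temporary capacity for fewer, larger steps: with `max_surge=2`, the same three replicas are replaced in two steps instead of three.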

Another benefit of Kubernetes is its ability to handle multiple types of workloads, including stateful and stateless applications. This means that Kubernetes can be used to manage a wide range of applications, from simple web applications to complex databases and data processing pipelines.

Conclusions

In essence, Docker and Kubernetes are two separate technologies that solve different problems. Docker is a platform for building, shipping, and running applications in containers, while Kubernetes is a container orchestration system. Kubernetes originally used Docker as its container runtime; it has since been decoupled from it through the Container Runtime Interface, and images built with Docker still run on it unchanged. Together, Docker and Kubernetes have played a significant role in the container revolution and have made containers more accessible and manageable for organizations of all sizes.

What's Next?

Kubernetes has been the de facto standard for container orchestration for years now, and many companies have already adopted it as a central service in their application deployment architecture.

At Hivemind Technologies, we have extensive experience migrating monolithic applications to managed Kubernetes services on clouds such as AWS and Azure, and transforming how you deploy your services for scalability, flexibility, and reliability.

Additionally, we can integrate other platforms with Kubernetes to manage the Continuous Integration and Continuous Delivery (CI/CD) of your deployments, monitor operational state through observability and tracing platforms, and optimize the cluster's compute resource footprint to reduce costs and improve performance.